By Giulia Dessì

Algorithms are an intangible yet ubiquitous part of modern life. They are as invisible as they are all-powerful, deciding which content search engines and social media platforms display and which remains buried.

While many have criticized algorithms for everything from creating political echo chambers to radicalizing the far right, few have weaponized them for social change, until now. As a worldwide uprising for racial justice coincides with a global pandemic, many people have turned to social media as a safe way to support the movement, whether by educating their followers about social justice issues or by mobilizing support for activists on the ground.

“The more interaction and comments you get on a video, the more viral the video goes no matter what,” Ki, a US-based singer with more than two million followers on TikTok (where she posts videos as @eyeamki) told Media Diversity Institute.

However, it’s a double-edged sword. While online spaces and a lack of distractions have focused unprecedented attention on the brutal killing of George Floyd and engaged users on institutionalized racism, in many ways the same structural racism has coloured Black creators’ experiences on these platforms, specifically through the algorithm.

But can algorithms fight algorithms?

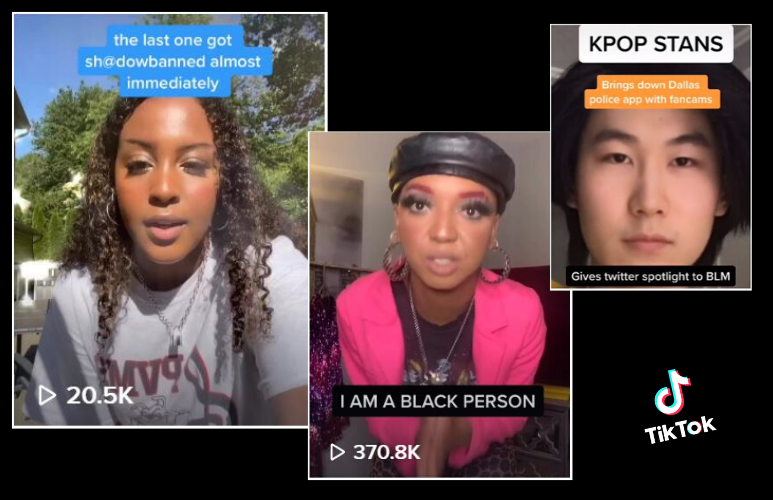

Over the past few weeks, Black TikTok users like Ki have encouraged their followers to “comment for the algorithm,” a protest tactic meant to boost content from Black creators who might have been sidelined by the algorithm, which has previously been criticized for reinforcing anti-Black, ableist and classist bias.

While Black users have been complaining about this for months, the protests have drawn more attention to how social media companies wield their power to suppress conversations about social justice and deprioritize them in their algorithms. It came to a head last month when the hashtags #BlackLivesMatter and #GeorgeFloyd appeared to be blocked, which TikTok blamed on a “glitch” in the system that left several videos about racial justice with zero views.

On May 19th, TikTok users staged an online protest, changing their profile pictures to draw attention to the issue. Lo and behold, #BlackLivesMatter content is now thriving.

“I feel like TikTok is trying to gain back the trust of African-American creators,” Ki continued, describing the culture shift she has experienced as TikTok responded to the criticism. “They put me on their trending page the moment they changed their CEO, after the scandal.”

At the end of one of her videos, Ki posted “I AM A BLACK PERSON. ✊🏽 you will not silence us, comment for the algorithm.”

Still, not everyone creating videos to support the #BlackLivesMatter movement is benefiting from the algorithmic boost—a fact that speaks to the multi-faceted nature of the issue.

“I have had so many videos taken down for speaking out against racism and racial injustice,” said Iman, a 16-year-old TikToker who posts as @theemuse.

“For example, I put up a video of this person who said that they are allowed to enslave black people and go out to buy black people and I basically told her that it was a disgusting joke and that black people are not for sale, and they allowed her to keep her video but my video got taken down.”

Iman asked her followers to help boost her videos in the algorithm, but she suspects that she has been shadow-banned, a practice in which social media platforms quietly limit a creator’s content to just their followers or people who click on their profile.

This reflects what University of California, Los Angeles (UCLA) professor Safiya Umoja Noble calls “algorithms of oppression,” her term for the way that algorithms reproduce the racist and sexist beliefs of programmers and of wider society.

“They suppress fair representations or socially just representation of people in platforms that make discriminatory predictions that deny opportunities to people of colour, to poor people,” said Noble, whose book *Algorithms of Oppression* examines how search engines reinforce structural inequalities.

The inner workings of these algorithms are kept as trade secrets by the tech giants, but this does not mean they cannot be challenged, contested or even manipulated.

“It’s important that people experiment; we still don’t know exactly what has an effect,” said Julia Velkova, an Assistant Professor of Technology and Social Change at Linköping University in Sweden.

“Also, algorithms change every day.”

This kind of creative experimentation was on display recently when fans of the South Korean music genre K-pop flooded hashtags used by white supremacists on Twitter with images of their favourite singers. Some also claim to have reserved tickets for the recent Trump rally in Tulsa, Oklahoma, and then not shown up, contributing to images of a sparsely filled arena.

Supporting the #BlackLivesMatter protests doesn’t stop at TikTok, either. YouTubers have created monetised videos to raise funds for Black Lives Matter, encouraging viewers to stream them with their ad blockers turned off as a way to financially support racial justice organisations without spending any money or leaving the house.

But are activist interventions enough? Not according to Noble.

“We need better public policy to look at the harms of AI and algorithms that normalise injustice, that normalise differential outcomes that exacerbate inequality,” Noble concluded.

Individually, the actions of online activists may seem inconsequential. But cumulatively, they represent a powerful collective struggle that challenges racial inequality and fights for representation in ways that could demand systemic change.